When Chats Go Wrong

The dangers of chatting with chatbots are becoming more apparent. The New York Times published an interesting article on chatbots that encouraged users to go into a delusional spiral. The pattern is that a chatbot outputs something bad, or close to bad, and because of the way LLMs work, that output pulls the rest of the conversation into a spiral of increasingly bad and unwise responses. This continues until the user starts a new chat, at which point the slate is wiped clean. The same dynamic shows up when a coding agent makes a few errors, which lead to more errors, and soon the agent is thrashing, wasting tokens on a futile quest to land a change that is now out of reach. It’s hard to get that same agent to start behaving better without resetting the context.

This is just something that happens with LLMs. Errors compound and pollute the context. Because of the APIs and UIs, people tend to think of LLMs as having a system prompt, followed by content. But at inference time, it’s all just prelude. Regardless of what the system prompt said, later content can lead the LLM to behave as if, having acted badly before, it should continue to act badly, since that is the most probable continuation.

At Continua AI, we make a chatbot that will chat with you or a group of people forever, in the same chat thread. These are long-lived chats, as chats often are. I have had the same chat with my high school friends for more than a decade. So we can’t fall back on users simply starting over with a fresh context when things get bad, because there’s just one chat, and abandoning it has a high cost.

Are problems even detectable, though? We think so. As the New York Times piece showed, when the same chat that OpenAI’s chatbot was stuck in was shown to Gemini, Gemini clearly identified it as problematic. So yes, even fairly subtle quality problems, like incorrect judgements by the LLM, can be detected.

This all seems very human. Don’t we all sometimes get stuck in a conversation, running along one line of thought, only to come back to the discussion later and clearly see that we went off the rails a bit? The solution for conversational ruts in humans is simple: step away and re-engage later, and it’s likely you can break out of the rut. This works for short-term conversations, but the same issue exists for long-term ruts as well; humans can get lost over months and years, going down bad paths of all sorts and coming to believe in the most outlandish conspiracy theories, political doctrines, or other delusions. There’s no easy fix for this, and it seems like the defining problem of recent years.

For LLMs, it is the other way around: short-term conversational issues are harder to fix, and long-term issues are easier. LLMs have no sense of time, and they currently don’t spend any cycles on rumination during their downtime while waiting for a chat. When the chat starts up again, their context is just as fresh as before.

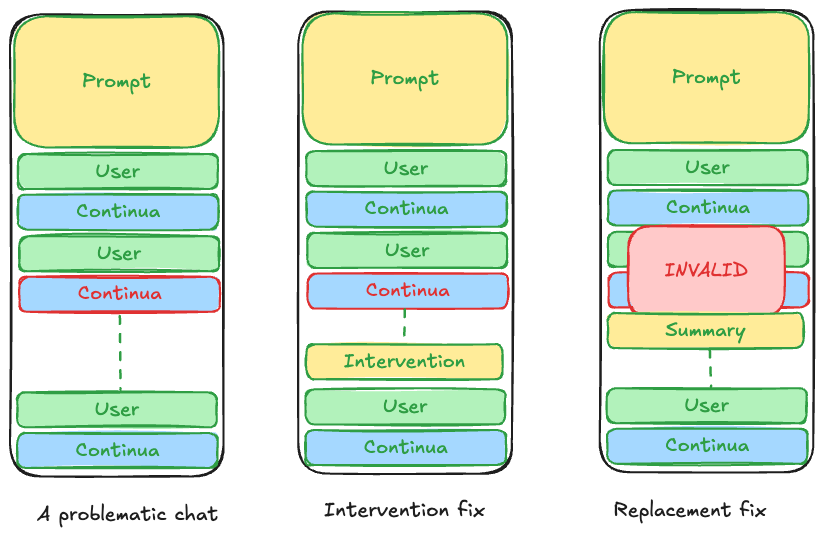

In the diagram above, we show a chat that has a bad Continua response outlined in red. That bad response can pollute all future responses. We have two approaches to fixing this problem. The first is a gentle fix that may work: an intervention we insert before the next set of user interactions, saying that the conversation has had issues, the user is unhappy, and the LLM should follow the prompt more closely in the future. This is fairly easy, and we’ve seen it work on some types of problems. Other types of problems are more serious and need a more significant solution. For those, we can mark parts of the conversation invalid, so that they are never retrieved or put into an LLM call. Instead, we substitute an equivalent summary that has the facts we need to remember, stated in a way that will not bias the LLM’s future responses.
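To make the two fixes concrete, here is a minimal sketch of how a context could be assembled for the next LLM call. It is an illustration, not Continua’s actual implementation: the `Message` fields, the `build_context` function, the wording of `INTERVENTION`, and the choice to deliver notes as "system" messages are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Message:
    role: str                      # "user" or "assistant"
    text: str
    invalid: bool = False          # hypothetical flag: never send this text to the LLM
    summary: Optional[str] = None  # hypothetical neutral summary that stands in for it


# Hypothetical wording for the gentle fix.
INTERVENTION = (
    "The recent conversation has had issues and the user is unhappy. "
    "Follow the original prompt more closely from here on."
)


def build_context(history: list[Message], intervene: bool = False) -> list[dict]:
    """Assemble the messages for the next LLM call.

    Invalid messages are dropped. If an invalid message carries a summary,
    the summary is sent in its place, so the facts survive without the
    biasing text. A run of invalid messages needs only one summary,
    attached to any message in the run.
    """
    context = []
    for msg in history:
        if not msg.invalid:
            context.append({"role": msg.role, "content": msg.text})
        elif msg.summary is not None:
            context.append({"role": "system", "content": msg.summary})
    if intervene:
        # Gentle fix: a note placed before the next set of user interactions.
        context.append({"role": "system", "content": INTERVENTION})
    return context
```

The stored history is left untouched in this sketch; only what gets sent to the model changes, so a fix can be revisited later without losing the original chat.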

Let’s take the example from the New York Times article. We think Continua has good quality, but, like other LLM-based chats, it’s going to go off the rails at least sometimes. So if Continua starts telling a user that their mathematical ideas are interesting, we can ask another LLM whether, based on what the user is saying and how the LLM is responding, there is an issue. If so, we can judge which fix is more appropriate. In this case, it’s unlikely that stricter prompt adherence would fix the issue; it may be better just to delete and replace the parts of the chat where Continua is responding inappropriately. That way, the next time the user comes back, Continua will call the LLM with a summary that says something like “The user proposed a new way of looking at mathematical concepts using a temporal dimension. This is not a good idea because it is insufficiently rigorous, and is unlikely to ever work. However, Continua did encourage this line of thought for a while, and should now stop doing so.” The LLM should respond appropriately at that point.
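The judging step could look roughly like the sketch below. Everything here is hypothetical: the `Fix` labels, the wording of `JUDGE_PROMPT`, and the `choose_fix` function are invented for illustration, and `judge` stands for any function that sends a prompt to a second LLM and returns its text reply.

```python
from enum import Enum
from typing import Callable


class Fix(Enum):
    NONE = "none"            # conversation looks fine
    INTERVENE = "intervene"  # gentle fix: remind the LLM to follow its prompt
    REPLACE = "replace"      # serious fix: swap the bad span for a neutral summary


# Hypothetical prompt wording, not a tested production prompt.
JUDGE_PROMPT = (
    "You are reviewing a chat between a user and an assistant.\n"
    "Decide whether the assistant is responding inappropriately, for example "
    "by encouraging ideas that do not hold up.\n"
    "Answer with exactly one word: none, intervene, or replace.\n\n"
    "Transcript:\n{transcript}"
)


def choose_fix(transcript: str, judge: Callable[[str], str]) -> Fix:
    """Ask a second model which fix, if any, the conversation needs."""
    reply = judge(JUDGE_PROMPT.format(transcript=transcript)).strip().lower()
    try:
        return Fix(reply)
    except ValueError:
        # An unparseable verdict is treated as "no action" rather than guessed at.
        return Fix.NONE
```

Passing the judge in as a plain function keeps the sketch independent of any particular LLM API, and makes it easy to run against a stub, for example `choose_fix(transcript, lambda prompt: "replace")`.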

We’re just starting out on the journey to fix these issues, but at Continua we’re uniquely affected by the problem, so we have much more incentive to fix it than other players in the chat space. We call it the “broken needle problem” because it reminds us of the way a bad needle can cause a record to get stuck, unable to move on like it is supposed to. We’ve already made good progress on this, but it’s too soon to report results. If we can solve this for LLMs, then I wonder if we can think about what it would mean to solve it for humans as well, and have systems that guide humans back to a good path if they start to wander off.

It’d be good to wrap this all up by saying that we’re just getting started, or that further work is needed, and that’s all true. But maybe the most appropriate ending is to say that we’ll try to detect if this line of work itself goes off the rails, and we’ll try to set an example by always keeping ourselves pointed in the right direction.